CLIP + StyleGAN. Searching the StyleGAN latent space using a text description embedded with CLIP.

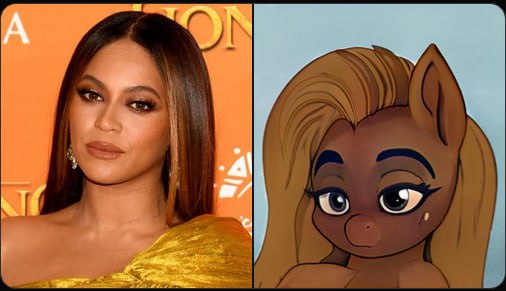

Queries: "A pony that looks like Beyonce", "... like Billie Eilish", "... like Rihanna"

📐 The basic idea

Generate an image with StyleGAN and pass it to CLIP, which computes a loss between the image embedding and the CLIP embedding of the text query. You then backprop through both networks and optimize a latent vector in StyleGAN's latent space.
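To make the loop concrete, here is a minimal toy sketch in plain Python. It does not load StyleGAN or CLIP: the "generator" `G` and "image encoder" `E` are tiny hand-picked linear maps, `text_emb` stands in for a CLIP text embedding, and the loss is assumed to be one minus cosine similarity. All names and numbers are hypothetical; only the structure (generate, embed, compare with the text, push the gradient back through both maps, update the latent) mirrors the idea above.

```python
import math

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matTvec(m, v):
    """Multiply the transpose of m by v (the backward pass of a linear map)."""
    return [sum(m[i][j] * v[i] for i in range(len(m))) for j in range(len(m[0]))]

def cosine_loss_and_grad(feat, target):
    """Return 1 - cos(feat, target) and its gradient with respect to feat."""
    dot = sum(a * b for a, b in zip(feat, target))
    nf = math.sqrt(sum(a * a for a in feat))
    nt = math.sqrt(sum(b * b for b in target))
    cos = dot / (nf * nt)
    grad = [-(t / (nf * nt) - cos * f / (nf * nf)) for f, t in zip(feat, target)]
    return 1.0 - cos, grad

def optimize_latent(G, E, text_emb, z, steps=500, lr=0.1):
    """Gradient descent on the latent z; G and E stay frozen."""
    history = []
    for _ in range(steps):
        image = matvec(G, z)             # stand-in for StyleGAN: latent -> image
        feat = matvec(E, image)          # stand-in for CLIP image encoder
        loss, g_feat = cosine_loss_and_grad(feat, text_emb)
        g_image = matTvec(E, g_feat)     # backprop through the encoder
        g_z = matTvec(G, g_image)        # backprop through the generator
        z = [zi - lr * gi for zi, gi in zip(z, g_z)]
        history.append(loss)
    return z, history

# Hypothetical toy weights: 2-d latent, 3-d "image", 2-d embedding.
G = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
E = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]]
text_emb = [0.0, 1.0]                    # pretend CLIP text embedding
z_start = [1.0, 0.0]

z_final, history = optimize_latent(G, E, text_emb, z_start)
print("first loss:", history[0], "last loss:", history[-1])
```

With the real models, an autograd framework would replace the two hand-written backward steps, and only the latent would be registered with the optimizer so that both networks stay frozen.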

🤬 Drawbacks: 1) it only works on text it knows; 2) it needs some cherry-picking, since only about 1 in 5 results are really good.

Source tweet.

Gradient Dude